How to Automate Big Data Pipelines with Centralized Orchestration

Learn how an IT automation platform can centrally manage and orchestrate the automation needed to run and maintain big data pipelines in an enterprise environment.

Editor’s Note: Given the continued evolution of IT automation, we thought it timely to refresh our 2020 point of view on data pipeline automation.

Today’s enterprises have a bottomless appetite for analytics and business insights. It’s why investment in big data and IoT projects is skyrocketing. For data and IT Ops teams, that means the need to automate data pipelines across the enterprise has become a top priority.

Yet capitalizing on investments in data is no slam dunk. For one thing, most enterprises use a wide variety of data tools, many of which don’t work well together without considerable time and effort from multiple internal and external teams.

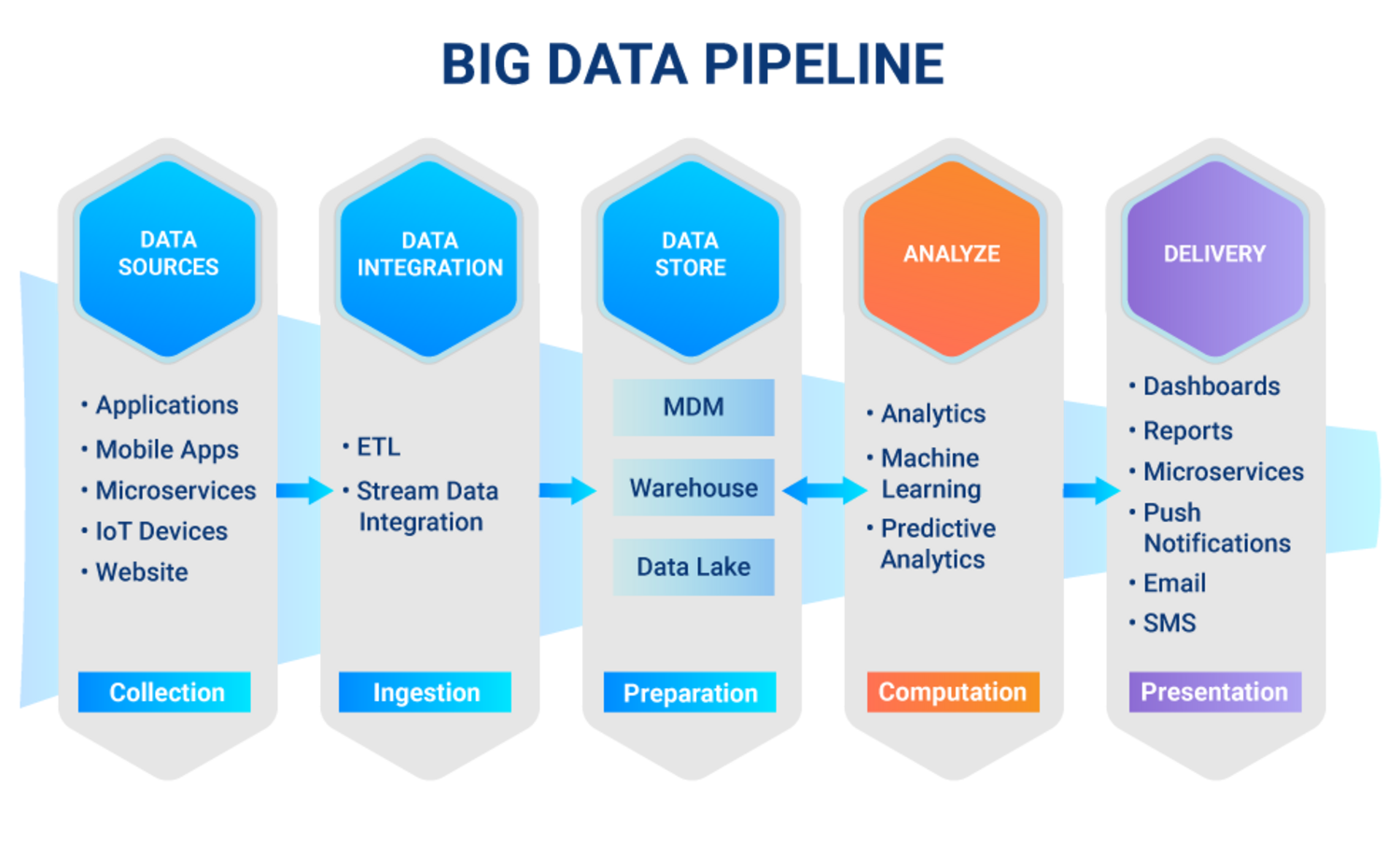

A full data pipeline traditionally passes through several stages. The image below illustrates the stages that data must flow through before it is ultimately refined and delivered to the business. Along this path, there are many open-source or commercial tools that enterprises will leverage.

Traditionally, enterprises have tried to automate end-to-end data pipeline processes with open-source tools, cloud schedulers, or custom scripts. However, this approach is error-prone and offers no way to manage or view the entire process centrally. What’s more, it’s almost impossible to maintain, monitor, or scale all of these automation processes across an ever-changing toolset.

There is, however, an answer: a data pipeline automation solution in the form of an IT automation platform. This type of solution centrally orchestrates every step across the many data and analytics tools in an enterprise environment. More on that below.

Roadblocks on the Way to Data Pipeline Automation

Empowering the enterprise to become truly data-driven is a top mission for most IT Operations teams. An enterprise that isn’t automating its data assets and tools isn’t making the most of its analytics potential. Failing to automate its data pipelines makes an enterprise more likely to:

- Frustrate business users by failing to make the leap from pilot to production. Big data projects often get the green light to move to production based on the results of a pilot project. In pilots, however, workflows are often orchestrated with disconnected, manually created scripts that take time to develop and maintain. What happens in production when dozens or even hundreds of applications and platforms need to be accommodated? Too often, the project bogs down because IT Operations doesn’t have the resources to apply the same processes outside of the pilot. Then, business users get frustrated because they’re not getting the analytics they expected.

- Be unable to orchestrate and scale across multiple systems. Accommodating a wide variety of data sources is a significant challenge when linking an organization’s big data tools. At a foundational level, each big data tool has its own way of managing and exchanging data; even with intensive integration and file-transfer management, it’s hard to bring all the necessary data into the organization’s pipeline. As a result, business users aren’t basing their decisions on the full scope of the data potentially at their disposal.

- Possess big data without being able to act on much of it. A key challenge in managing multiple big data tools, each with its own environment, is that data that hasn’t been aggregated and processed lacks the context needed to make it actionable. Organizations have numerous development tools to choose from, but development often slows down at this stage, and there is a risk of creating islands of automation that aren’t interoperable.

Orchestration and Automation Platforms: Completing the Puzzle

Born from workload automation, service orchestration and automation platforms (SOAPs) have evolved into an answer for managing big data. With agile integrations, native managed file transfer, and real-time, event-based triggers, SOAPs connect data pipeline tools. A SOAP orchestrates and automates the entire end-to-end process while giving IT Ops centralized observability and management.
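To make the idea of an event-based trigger concrete, here is a minimal Python sketch that starts work when a new file lands in a directory. It is a simplification under stated assumptions: it polls a folder rather than subscribing to real events, and `poll_for_new_files`, `demo`, and the file names are hypothetical illustrations, not part of any SOAP product’s API. A real platform may instead react to filesystem notifications, message queues, or API callbacks.

```python
import tempfile
from pathlib import Path
from typing import Callable


def poll_for_new_files(directory: Path, seen: set[str],
                       on_new_file: Callable[[Path], None]) -> int:
    """Fire on_new_file once for each file not seen in an earlier poll.

    Hypothetical helper; polling stands in for true event notifications.
    Returns the number of triggers fired during this poll.
    """
    fired = 0
    for entry in sorted(directory.iterdir()):
        if entry.is_file() and entry.name not in seen:
            seen.add(entry.name)
            on_new_file(entry)  # in practice: launch the downstream workflow
            fired += 1
    return fired


def demo() -> list[str]:
    """Simulate two file arrivals in a hypothetical landing folder."""
    triggered: list[str] = []
    seen: set[str] = set()

    def record(path: Path) -> None:
        triggered.append(path.name)

    with tempfile.TemporaryDirectory() as tmp:
        landing = Path(tmp)
        (landing / "sales_day1.csv").write_text("id,amount\n1,10\n")
        poll_for_new_files(landing, seen, record)  # fires for sales_day1.csv
        poll_for_new_files(landing, seen, record)  # nothing new, fires nothing
        (landing / "sales_day2.csv").write_text("id,amount\n2,20\n")
        poll_for_new_files(landing, seen, record)  # fires for sales_day2.csv
    return triggered


if __name__ == "__main__":
    print(demo())  # ['sales_day1.csv', 'sales_day2.csv']
```

Tracking `seen` by file name keeps each file from triggering twice; a production trigger would also need to persist that state and confirm a file has finished writing before starting the workflow.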

As with almost every other area of IT, workload automation tools and SOAPs are constantly evolving. A rapidly growing number of enterprises rely on this class of enterprise-grade IT automation solutions to orchestrate their mission-critical business processes and applications, including data pipeline tools and platforms.

SOAPs orchestrate every aspect of an organization’s data and analytics project, from ingesting data to producing the workflows that process the data and sharing results with business users and other systems.
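The ingest, process, and share flow described above can be sketched as a small dependency graph. The Python below is a hypothetical illustration, not how any particular SOAP works internally: the step functions are stubs standing in for real tools, and it uses the standard library’s `graphlib.TopologicalSorter` (Python 3.9+) to run each step only after its upstream steps, recording every outcome in one central log.

```python
from graphlib import TopologicalSorter
from typing import Callable


# Hypothetical stub steps; a real pipeline would call out to tools such as
# a Hadoop job, a data integration tool, or a BI report refresh instead.
def ingest(ctx: dict) -> None:
    ctx["raw"] = [3, 1, 2]


def transform(ctx: dict) -> None:
    ctx["clean"] = sorted(ctx["raw"])


def publish(ctx: dict) -> None:
    ctx["report"] = f"{len(ctx['clean'])} records ready"


STEPS: dict[str, Callable[[dict], None]] = {
    "ingest": ingest,
    "transform": transform,
    "publish": publish,
}

# Each step maps to the set of steps that must finish before it runs.
DEPENDENCIES: dict[str, set[str]] = {
    "ingest": set(),
    "transform": {"ingest"},
    "publish": {"transform"},
}


def run_pipeline(steps, dependencies):
    """Run steps in dependency order and record each outcome in one central log."""
    ctx: dict = {}
    log: list[tuple[str, str]] = []
    for name in TopologicalSorter(dependencies).static_order():
        try:
            steps[name](ctx)
            log.append((name, "succeeded"))
        except Exception as exc:
            log.append((name, f"failed: {exc}"))
            break  # skip downstream steps; the log shows where it broke
    return ctx, log


if __name__ == "__main__":
    ctx, log = run_pipeline(STEPS, DEPENDENCIES)
    for name, status in log:
        print(name, status)
    print(ctx["report"])
```

Because every step reports into the same log, a failure in `transform` stops `publish` from running on incomplete data and shows exactly where the pipeline broke.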

Take, for instance, Apache Hadoop, an older but still widely used framework for processing big data. Hadoop is open-source software that enables distributed processing of large data sets across clusters of commodity servers. Despite the availability of open-source management tools, the siloed nature of such platforms causes big headaches for IT teams.

In addition to Hadoop, other common solutions along the data pipeline include data integration tools like Informatica PowerCenter, business intelligence suites like SAP BusinessObjects, and dashboarding and reporting tools like Qlik and Tableau.

A key feature of SOAPs is real-time automation across on-premises, cloud, and container environments. This capability matters because hybrid IT environments are now the most prevalent: an automation platform is designed to work across all data tools and platforms, regardless of where they are installed.

Why Enterprises Automate Data Pipelines

When an organization automates its big data pipeline, it can expect substantial efficiency gains, including the potential to reassign at least 15–20 percent of engineering staff to higher-value tasks. Yet the biggest win from automating data processes is faster implementation of big data projects.

Replacing manual scripting and point automation tools with automated workflow management reduces complexity, shortens development time, and helps prevent coding errors.

Automation also gives IT a single-source view of workflows, including an end-to-end view of data pipelines at every stage. With that view, IT teams can consolidate steps in their processes and reduce the number of workflows they maintain.

Finally, automation improves service-level agreement (SLA) performance. A single-source view lets IT identify and correct potential issues before deadlines arrive. And because each automated step is visible, enterprises can monitor the process and quickly find the root cause of errors or failures.
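The SLA and root-cause points above reduce to simple checks once step status is tracked centrally. This Python sketch assumes a hypothetical `StepStatus` record, per-step duration estimates, and steps that run one after another; `sla_at_risk` and `first_failure` are illustrative names, and a real platform would have its own data model and forecasting.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class StepStatus:
    """Hypothetical status record for one pipeline step, as a central console might track it."""
    name: str
    state: str                # "done", "running", "pending", or "failed"
    est_remaining: timedelta  # estimated time left; zero for finished steps


def sla_at_risk(steps: list[StepStatus], now: datetime, deadline: datetime) -> bool:
    """Return True if unfinished work is projected to end after the SLA deadline.

    Assumes steps run one after another, so remaining estimates simply add up.
    """
    remaining = sum(
        (s.est_remaining for s in steps if s.state in ("running", "pending")),
        timedelta(),
    )
    return now + remaining > deadline


def first_failure(steps: list[StepStatus]) -> Optional[str]:
    """Return the earliest failed step in pipeline order: where root-cause analysis starts."""
    for s in steps:
        if s.state == "failed":
            return s.name
    return None


if __name__ == "__main__":
    steps = [
        StepStatus("ingest", "done", timedelta()),
        StepStatus("transform", "running", timedelta(minutes=40)),
        StepStatus("publish", "pending", timedelta(minutes=30)),
    ]
    # 70 minutes of work left at 05:00 against a 06:00 deadline.
    print(sla_at_risk(steps, datetime(2024, 1, 1, 5, 0), datetime(2024, 1, 1, 6, 0)))  # True
```

Flagging the run as at risk while `transform` is still executing is what gives operators time to intervene before the deadline passes.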

Final Thoughts

As enterprises invest ever greater resources in data projects, it’s vital to recognize the complexities and challenges that data pipelines bring. Workload automation tools and SOAPs are crucial for orchestrating complex data pipelines that stretch across multiple applications and environments. Enterprise-grade automation solutions offer a reliable, low-risk way to orchestrate data processes.

And if you would like to see how Stonebranch solutions can help automate the big data pipeline, explore our DataOps orchestration solution.