Bridge the On-Premises to Cloud Data Pipeline Gap Using Kubernetes

As enterprises shift from on-premises to cloud environments, some applications continue to run on-prem and others in the cloud. With this evolution comes a dramatic increase in the need to automate complex data pipelines.

Container-based applications are growing in popularity. Often, containerized applications in the cloud need to connect to on-prem platforms or applications. In most cases, creating a sustainable data pipeline that crosses a hybrid IT environment requires highly secure automation.

Kubernetes is designed to help automate, scale and deploy applications running in containers. On its own, however, Kubernetes can be challenging to manage. The complexity of managing Kubernetes is why many organizations layer a managed container platform service (like Red Hat OpenShift) on top of their Kubernetes platform.

By itself, Kubernetes with a managed container platform does not address the challenge of creating a secure data pipeline that connects on-premises mainframes and distributed servers to the cloud. This challenge is especially relevant for organizations that store data on-premises that is regularly used by applications in the cloud (or vice versa). Read on to learn how enterprises are solving this challenge today.

Creating a Secure Data Pipeline with Workload Automation (WLA) and Managed File Transfer

As organizations expand their use of container-based applications across hybrid IT environments, workload automation has become a go-to solution. A WLA solution, with built-in managed file transfer capabilities, bridges the gap between on-prem and cloud by making sure:

- Data moves to the right place.

- Data is synchronized between environments.

- Data is moved at the right time, whether in batch or in real time.

- Data is secure during its transfer.

Workload automation solutions rely on agents, which are small pieces of software deployed within your applications, platforms or containers. Among other things, agents connect to one another over encrypted connections. These connections create the pathway between any application or platform within your hybrid IT environment. Managed file transfer is then employed to move the data between these connection points.
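To make the idea of a verified transfer between two connection points more concrete, here is a minimal Python sketch. It is not Stonebranch's implementation: the function names, directory names and file name are all hypothetical, and a local file copy stands in for the encrypted agent-to-agent hop. It only illustrates one common safeguard, comparing a checksum before and after the move.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def transfer_with_verification(source: Path, destination: Path) -> str:
    """Copy source to destination and confirm that both digests match.

    Assumption: the local copy is a placeholder for the encrypted
    transfer that real agents would perform between environments.
    """
    expected = sha256_of(source)
    destination.parent.mkdir(parents=True, exist_ok=True)
    shutil.copyfile(source, destination)
    actual = sha256_of(destination)
    if actual != expected:
        destination.unlink()  # do not leave a corrupted file behind
        raise IOError(f"checksum mismatch for {destination}")
    return actual


def demo() -> bool:
    """Run one transfer between two temporary directories."""
    with tempfile.TemporaryDirectory() as workdir:
        source = Path(workdir) / "on_prem" / "orders.csv"  # hypothetical file
        source.parent.mkdir()
        source.write_text("id,amount\n1,100\n")
        target = Path(workdir) / "cloud" / "orders.csv"
        digest = transfer_with_verification(source, target)
        same_digest = digest == sha256_of(source)
        same_content = target.read_text() == source.read_text()
        return same_digest and same_content


if __name__ == "__main__":
    print("transfer verified:", demo())
```

In a real deployment the copy step would be replaced by whatever transfer mechanism the automation platform provides; the checksum comparison is simply one way to confirm that the data arrived intact.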

Because a WLA solution is built around scheduling, enterprises typically create scheduling workflows to orchestrate how data will move. These workflows include the movement of data but may also include additional automated jobs or tasks. This is especially relevant because so many cloud-based containerized environments are transient in nature: the applications are spun up, perform a task and are then deactivated and disappear.
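A workflow of this kind can be pictured as a set of tasks with dependencies between them. The sketch below uses Python's standard `graphlib` module (Python 3.9 or later) to derive a valid run order. The task names are hypothetical and chosen only to mirror the transient-container scenario described above; a real WLA product models workflows in its own way.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each task maps to the set of tasks it depends on.
WORKFLOW = {
    "extract_from_mainframe": set(),
    "start_cloud_container": set(),
    "transfer_file": {"extract_from_mainframe", "start_cloud_container"},
    "load_into_cloud_app": {"transfer_file"},
    "stop_cloud_container": {"load_into_cloud_app"},
}


def execution_order(workflow: dict[str, set[str]]) -> list[str]:
    """Return an order in which every task runs after its dependencies.

    Raises graphlib.CycleError if the dependencies form a loop.
    """
    return list(TopologicalSorter(workflow).static_order())


if __name__ == "__main__":
    for step, task in enumerate(execution_order(WORKFLOW), start=1):
        print(step, task)
```

The file transfer is only scheduled once both the extract and the container start-up have finished, and the container is shut down last, which matches the spin up, perform a task, disappear pattern.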

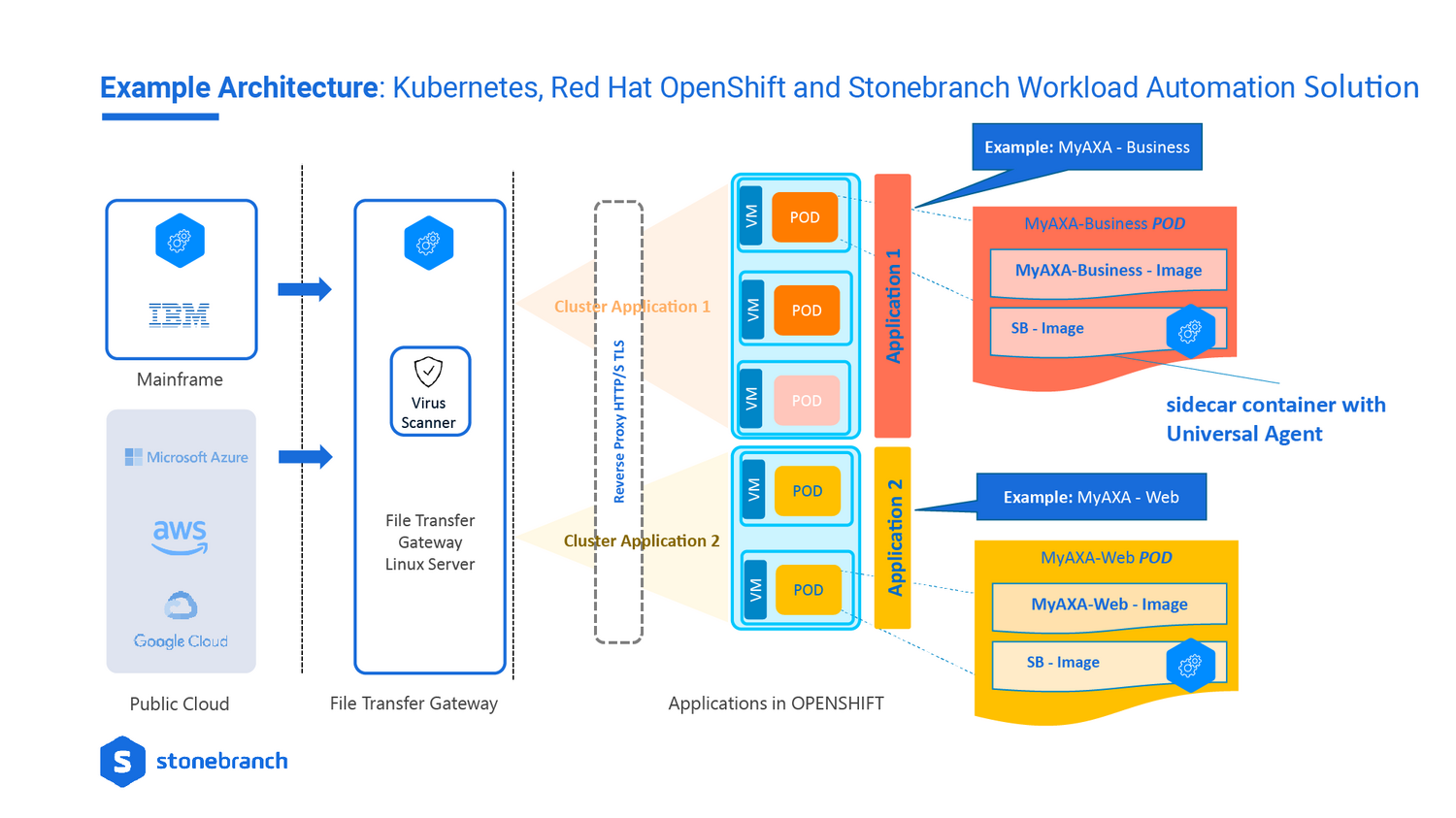

Example Architecture

Below, you will see one way to deploy the combination of Kubernetes, Red Hat OpenShift and Stonebranch’s workload automation solution. This example comes from the architecture that AXA Switzerland has deployed. As you will notice, they have connected applications running on IBM mainframe to applications running in AWS, Azure and Google Cloud services.

The Must-Haves for Orchestrating Data in the Cloud

When organizations deploy their applications in hybrid IT environments, it’s essential to ensure they can:

- Connect and manage their on-premises and cloud platforms and applications.

- Securely send data via managed file transfer between on-premises and cloud environments and between different cloud providers.

- Perform these transfers in real time or on a schedule.

- Maintain control and full visibility from a single platform.

Summary

Using container technology helps manage and simplify an enterprise's transition between on-premises and cloud environments. Of course, containers can be tricky to work with, but the right tools make the challenges manageable. By combining Kubernetes with OpenShift and Stonebranch, enterprises have demonstrated that they can create and sustain a scalable data pipeline.

Next Steps in Bridging the On-Prem to Cloud Divide:

AXA Switzerland was kind enough to join Stonebranch during a recent web seminar. Be sure to check out the on-demand recording here: How to Use Kubernetes to Orchestrate Managed File Transfers within a Hybrid IT Environment.

Within the on-demand webinar, you’ll see a demo that illustrates how to:

- Create an Agent-Cluster in Universal Controller for each OpenShift application.

- Add the Universal Sidecar container to the application deployment script.

- Configure the file transfer workflow using the Universal Controller Web user interface.
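The second step, adding an agent container alongside the application, follows the general Kubernetes sidecar pattern. The Python sketch below shows that pattern on a plain Deployment manifest expressed as a dictionary. Every name in it (the deployment name, container names, image references and volume name) is a hypothetical placeholder, not a value taken from Stonebranch's or Red Hat's documentation.

```python
import copy
import json

# Hypothetical application Deployment with one container and a shared volume.
DEPLOYMENT = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "orders-app"},
    "spec": {
        "replicas": 1,
        "selector": {"matchLabels": {"app": "orders-app"}},
        "template": {
            "metadata": {"labels": {"app": "orders-app"}},
            "spec": {
                "volumes": [{"name": "transfer-data", "emptyDir": {}}],
                "containers": [{
                    "name": "app",
                    "image": "registry.example.com/orders-app:1.0",
                    "volumeMounts": [
                        {"name": "transfer-data", "mountPath": "/data"}
                    ],
                }],
            },
        },
    },
}

# Hypothetical agent sidecar that mounts the same volume as the application.
SIDECAR = {
    "name": "automation-agent",
    "image": "registry.example.com/automation-agent:latest",
    "volumeMounts": [{"name": "transfer-data", "mountPath": "/data"}],
}


def add_sidecar(deployment: dict, sidecar: dict) -> dict:
    """Return a copy of the deployment with the sidecar container appended.

    The call is idempotent: a container with the same name is not added twice.
    """
    updated = copy.deepcopy(deployment)
    containers = updated["spec"]["template"]["spec"]["containers"]
    if all(existing["name"] != sidecar["name"] for existing in containers):
        containers.append(copy.deepcopy(sidecar))
    return updated


if __name__ == "__main__":
    print(json.dumps(add_sidecar(DEPLOYMENT, SIDECAR), indent=2))
```

Because both containers run in the same pod and mount the same `emptyDir` volume, files written by the application under `/data` are visible to the agent container, which is what makes the sidecar a convenient place to hand off file transfers.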

Additionally, be sure to take a look at this detailed solution document about Stonebranch’s Red Hat–certified OpenShift automation solution.
