What is a DataOps Tool: Five Core Capabilities

DataOps tools are part of an emerging technology category that helps organizations streamline data delivery and improve productivity with process integrations and automations.

In December 2022, Gartner® published its first Market Guide for DataOps Tools. Though DataOps has existed for years as a methodology, this guide identifies the DataOps Tools market as an emerging class of technology. In 2024, Gartner released an updated edition of the report that goes deeper into the tools used to automate and orchestrate the entire data pipeline, from data collection and ingestion to transformation and delivery.

What is a DataOps Tool?

The goal of DataOps is to automate and optimize the data management process from end to end. DataOps tools help optimize the cost of data management by minimizing the need for manual intervention.

“By 2026, a data engineering team guided by DataOps practices and tools will be 10 times more productive than teams that do not use DataOps.”

— 2024 Gartner Market Guide for DataOps Tools

For data and analytics (D&A) leaders who want to boost operational excellence and productivity, Gartner recommends using a DataOps tool to combine data management tasks handled by multiple technologies into a comprehensive data pipeline lifecycle.

Who is DataOps for?

While the primary buyers of DataOps tools are D&A leaders — such as chief data and analytics officers (CDAOs) — the primary users are data managers and data consumers:

- Data managers include data engineers, operations engineers, database administrators, and data architects.

- Data consumers include business analysts, business intelligence developers, data scientists, and business technologists (line-of-business users who are domain experts).

DataOps tools give a unified experience to both data managers and consumers to drive productivity and operational excellence.

“DataOps tools eliminate various inefficiencies and misalignments between data management and data consumption by streamlining data delivery processes, aligning data team personas and operationalizing data artifacts (processes, pipelines and platforms) thereby accelerating the delivery of business benefits and outcomes.”

— 2024 Gartner Market Guide for DataOps Tools

Here at Stonebranch, we also see interest in DataOps from traditional IT Ops leadership, in addition to D&A leaders. The driver is to deliver automation as a service. When IT Ops is involved, it’s typically in partnership with the data teams. In this scenario, IT Ops gains visibility and governance, while data teams are empowered to focus on innovation.

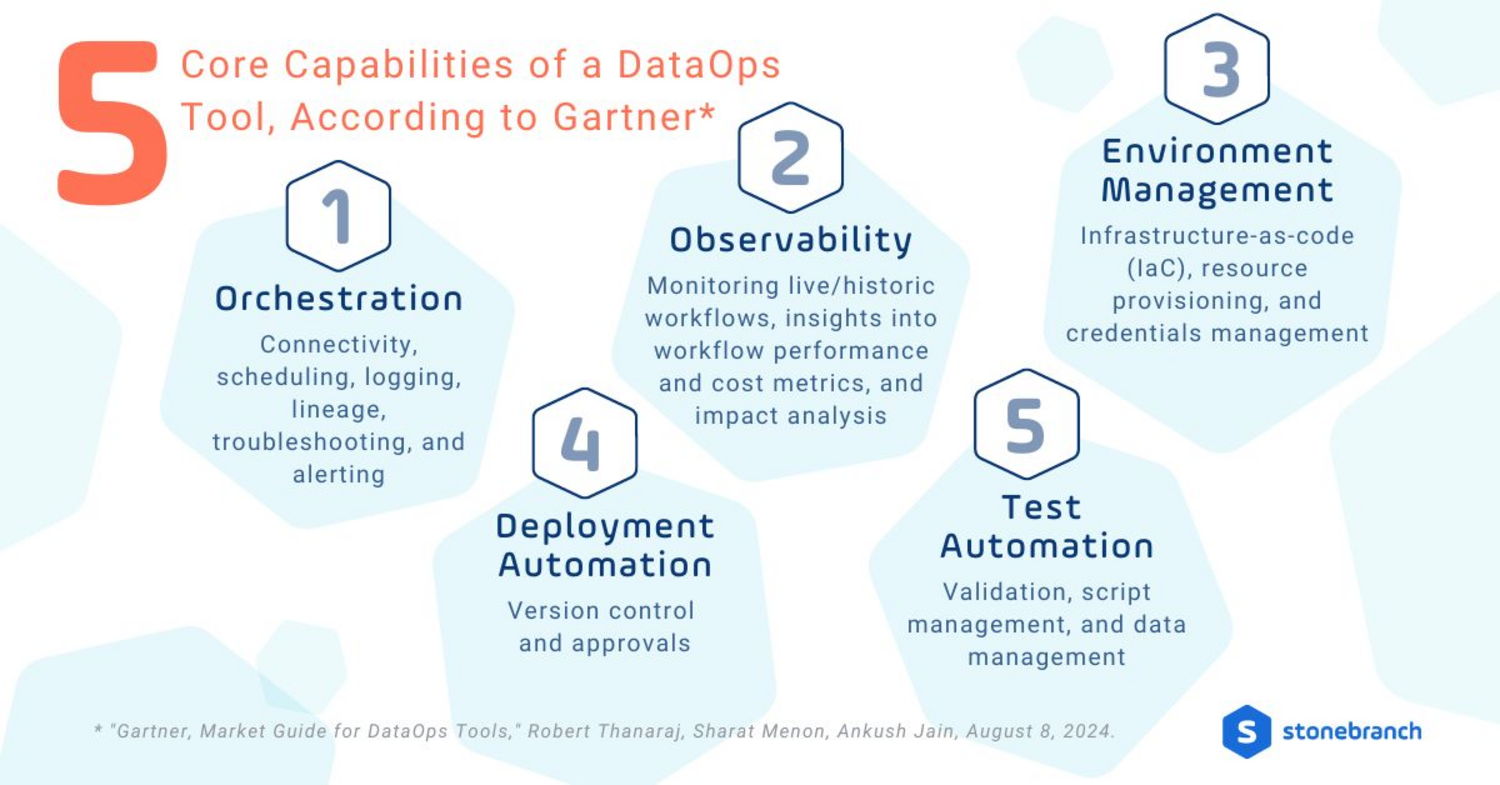

The 5 Core Capabilities of a DataOps Tool, According to Gartner*

DataOps platforms offer powerful automation and agility across the full data transformation lifecycle. Five key features unite all the best DataOps tools:

- Orchestration capabilities include connectivity, scheduling, logging, lineage, troubleshooting, and alerting

- Observability covers monitoring of live and historical workflows, insight into workflow performance and cost metrics, and impact analysis

- Environment Management features cover infrastructure-as-code (IaC), resource provisioning, and credential management

- Deployment Automation entails version control and approvals

- Test Automation provides validation, script management, and data management

Each of these capabilities is designed to streamline real-time data operations and accelerate success for D&A teams. Orchestration and observability are called out as must-have DataOps features to enable the end-to-end flow of data.
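To make the two must-have capabilities more concrete, here is a minimal Python sketch of what orchestration (dependency ordering, logging, alerting) and observability (per-task status and duration) look like at their simplest. It is an illustration only, not how UAC or any other DataOps tool is implemented. The `run_pipeline` function, the task names, and the `alert` callback are all hypothetical names chosen for this example.

```python
import logging
import time
from graphlib import TopologicalSorter  # standard library, Python 3.9+

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("pipeline")


def run_pipeline(tasks, dependencies, alert):
    """Run tasks in dependency order and return per-task status and duration.

    tasks: dict mapping task name -> zero-argument callable
    dependencies: dict mapping task name -> set of upstream task names
                  (assumption: every upstream name is also a key in tasks)
    alert: callable invoked with (task_name, exception) when a task fails
    """
    metrics = {}
    failed = set()
    graph = {name: set(dependencies.get(name, ())) for name in tasks}
    for name in TopologicalSorter(graph).static_order():
        # Skip a task when any of its upstream tasks failed or was skipped.
        if graph[name] & failed:
            failed.add(name)
            metrics[name] = {"status": "skipped", "seconds": 0.0}
            log.warning("skipped %s (upstream failure)", name)
            continue
        start = time.perf_counter()
        try:
            tasks[name]()
            status = "ok"
        except Exception as exc:
            status = "failed"
            failed.add(name)
            alert(name, exc)  # the alerting hook
        metrics[name] = {"status": status, "seconds": time.perf_counter() - start}
        log.info("%s finished with status=%s", name, status)
    return metrics


# Hypothetical three-step pipeline in which the transform step fails.
def ingest():
    pass


def transform():
    raise ValueError("bad schema")


def deliver():
    pass


alerts = []
result = run_pipeline(
    {"ingest": ingest, "transform": transform, "deliver": deliver},
    {"transform": {"ingest"}, "deliver": {"transform"}},
    alert=lambda name, exc: alerts.append((name, str(exc))),
)
```

In this run, `ingest` succeeds, `transform` fails and triggers one alert, and `deliver` is skipped because its upstream step failed. A real DataOps tool adds scheduling, lineage, retries, and connectivity to the data stack on top of this basic pattern.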

Gartner’s Top 3 Recommendations

D&A leaders should keep the following in mind when looking to improve data team performance and remediate operational concerns:

- Evaluate DataOps tools based on key capabilities such as connectivity to your data stack (existing and expected), workflow automation, and release automation.

- Prioritize tools that provide a unified view of workloads across your hybrid IT data stack, while also offering features such as lineage, cataloging, resource provisioning, and environment management.

- Focus on benefits when introducing your selected tool to data managers and consumers. Data managers will be most interested in the ability to monitor workflows and receive proactive alerts; data consumers will appreciate reduced cycle times to access data and improved data integrity.

Putting the Ops in DataOps with Stonebranch

Stonebranch is named as a representative vendor in the 2024 Market Guide for DataOps Tools for two products: Universal Automation Center (UAC) and Universal Data Mover Gateway (UDMG).

UAC specializes in centralizing control of IT automation processes across enterprise-wide data pipelines and toolchains. The platform easily integrates with data pipeline-centric tools, including ETL tools, as well as data lake, data warehouse, and visualization tools. An in-built component of UAC, UDMG is a B2B managed file transfer (MFT) platform that manages the flow of business-critical data between you and your external business partners, vendors, and suppliers.

Together, UAC and UDMG are ideal for D&A teams who:

- Want to keep using existing data tools but are ready to graduate from open-source schedulers to enterprise-grade platforms.

- Would like a single platform to connect data teams with developers, IT Ops, and CloudOps teams — to help scale their data program.

- Need to operationalize dev/test/prod DataOps lifecycle management methodologies to gain speed and improve data quality.

- Want to use OpenTelemetry standards to gain full observability across the entire pipeline and optimize uptime with workflow monitoring and proactive alerts.

- Have a growing or changing data tool landscape, thus requiring the ability to rapidly build new integrations (or download pre-existing integrations).

- Need to enable data scientists or business technologists with simple self-service capabilities via the platform or third-party tools like ServiceNow, Microsoft Teams, or Slack.

UAC is designed for today’s challenges — and for whatever comes next. To learn more, browse through our customer success stories or reach out to the Stonebranch sales team for a demonstration of the platform.

* "Gartner, Market Guide for DataOps Tools," Robert Thanaraj, Sharat Menon, Ankush Jain, August 8, 2024.

Frequently Asked Questions

What is a DataOps Tool?

A DataOps tool is a software solution designed to streamline and enhance data operations within an organization. It facilitates collaboration between data teams, including data engineers, data analysts, and data scientists, by automating workflows and integrating various data sources. As demand for real-time data analytics and big data solutions increases, implementing a DataOps strategy, along with a robust DataOps platform, becomes critical for organizations aiming to harness their data assets efficiently.

What are the Five Critical Data Capabilities of DataOps Tools?

According to the Gartner Market Guide for DataOps Tools, the five core capabilities of DataOps tools are orchestration, observability, environment management, deployment automation, and test automation. Together, these capabilities help organizations manage their data effectively, maintaining high data quality and a steady flow of data across environments. Each one plays a role in making data processes more efficient and improving overall data management.

How Does Data Quality Affect DataOps?

Data quality is a cornerstone of successful DataOps practices. Poor data quality can lead to inaccurate data analysis and flawed decision-making. Organizations must focus on implementing data validation techniques and data profiling tools to ensure data entering into their data pipelines meets quality standards from the moment of data ingestion. This minimizes risks and boosts confidence in data analytics outcomes.
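As a rough illustration of validation at the point of ingestion, the Python sketch below checks incoming rows for required fields and numeric values, then separates clean rows from rejected ones. The `validate_rows` function, the `ValidationReport` class, and the column names are hypothetical and chosen for this example. Production pipelines typically rely on dedicated validation and profiling tools rather than hand-written checks.

```python
from dataclasses import dataclass, field


@dataclass
class ValidationReport:
    valid: list = field(default_factory=list)
    rejected: list = field(default_factory=list)  # (row, reasons) pairs


def validate_rows(rows, required, numeric):
    """Split rows into valid and rejected based on two simple rules.

    rows: iterable of dicts, one per record
    required: column names that must be present and non-empty
    numeric: column names whose values must parse as numbers
    """
    report = ValidationReport()
    for row in rows:
        reasons = []
        for col in required:
            if row.get(col) in (None, ""):
                reasons.append(f"missing {col}")
        for col in numeric:
            value = row.get(col)
            if value in (None, ""):
                continue  # absence is handled by the required-field rule
            try:
                float(value)
            except (TypeError, ValueError):
                reasons.append(f"{col} is not numeric")
        if reasons:
            report.rejected.append((row, reasons))
        else:
            report.valid.append(row)
    return report


# Hypothetical batch of order records arriving at ingestion.
rows = [
    {"order_id": "A1", "amount": "19.99"},
    {"order_id": "", "amount": "5"},
    {"order_id": "A3", "amount": "n/a"},
]
report = validate_rows(rows, required=["order_id", "amount"], numeric=["amount"])
```

Here only the first row passes. The second is rejected for a missing `order_id` and the third for a non-numeric `amount`, so neither reaches downstream analytics.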

What Role Does Data Integration Play in DataOps?

Data integration is crucial for unifying various data sources into a cohesive system. Organizations need data integration tools that can handle complex data environments and merge data assets from cloud sources, data lakes, and data warehouses. This capability enables real-time data access and improves overall data management.
